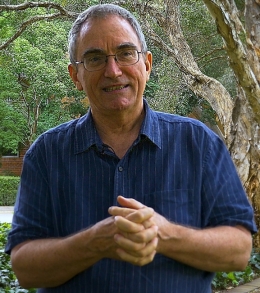

Every day, tens of millions of people around the world depend on smart speakers and their voice-recognition software to find music, interact with the Internet of Things and play games. When we think about Amazon’s Echo speakers capturing commands for Alexa, its cloud-based digital assistant, we often overlook the role that humans play in the process. But we should think carefully before bringing smart speakers into our homes, says David Vaile, Stream Lead for Data Protection and Surveillance at the Allens Hub for Technology, Law & Innovation, UNSW Law.