Powered by Pascal Architecture, Tesla P100 Delivers Massive Leap in Data Center Throughput

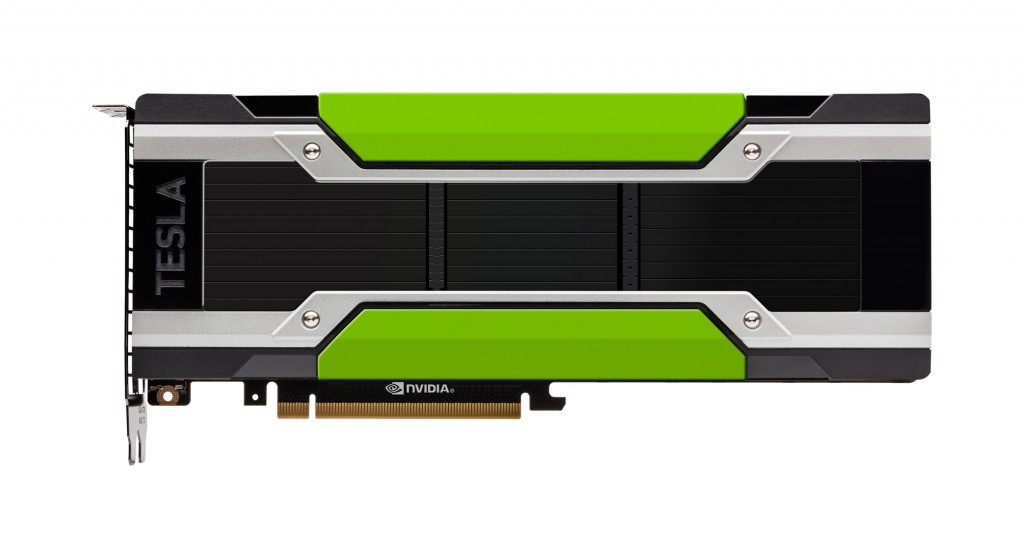

Mumbai, India—June 20, 2016—To meet the unprecedented computational demands placed on modern data centers, NVIDIA today introduced the NVIDIA® Tesla® P100 GPU accelerator for PCIe servers, which delivers massive leaps in performance and value compared with CPU-based systems.

Demand for supercomputing cycles is higher than ever. According to National Science Foundation data [1], the majority of scientists are unable to secure adequate time on supercomputing systems to conduct their research. In addition, high performance computing (HPC) technologies are increasingly required to power computationally intensive deep learning applications, while researchers are applying AI techniques to drive advances in traditional scientific fields.

The Tesla P100 GPU accelerator for PCIe meets these computational demands through the unmatched performance and efficiency of the NVIDIA Pascal™ GPU architecture. It enables the creation of “super nodes” that provide the throughput of more than 32 commodity CPU-based nodes and deliver up to 70 percent lower capital and operational costs [2].

“Accelerated computing is the only path forward to keep up with researchers’ insatiable demand for HPC and AI supercomputing,” said Ian Buck, vice president of accelerated computing at NVIDIA. “Deploying CPU-only systems to meet this demand would require large numbers of commodity compute nodes, leading to substantially increased costs without proportional performance gains. Dramatically scaling performance with fewer, more powerful Tesla P100-powered nodes puts more dollars into computing instead of vast infrastructure overhead.”

The Tesla P100 for PCIe is available in a standard PCIe form factor and is compatible with today’s GPU-accelerated servers. It is optimized to power the most computationally intensive AI and HPC data center applications. A single Tesla P100-powered server delivers higher performance than 50 CPU-only server nodes when running the AMBER molecular dynamics code [3], and is faster than 32 CPU-only nodes when running the VASP materials science application [4].
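As a rough illustration of what these node-equivalence figures imply, the sketch below estimates how many Tesla P100-powered servers would match a given CPU-only cluster. The function name `p100_servers_needed` and the 640-node cluster size are hypothetical, and the calculation assumes the claimed ratios scale linearly with node count, which the figures above do not establish.

```python
import math

# Vendor-claimed equivalence: one P100-powered server outperforms this many
# CPU-only nodes on each application (figures quoted in the text above).
CLAIMED_CPU_NODES_PER_P100_SERVER = {"AMBER": 50, "VASP": 32}


def p100_servers_needed(cpu_nodes: int, application: str) -> int:
    """Estimate the P100 servers needed to match `cpu_nodes` CPU-only nodes.

    Assumption: the claimed ratio scales linearly with cluster size.
    Real scaling depends on the workload and the interconnect.
    """
    ratio = CLAIMED_CPU_NODES_PER_P100_SERVER[application]
    return math.ceil(cpu_nodes / ratio)


if __name__ == "__main__":
    # Hypothetical 640-node CPU-only cluster.
    for app in CLAIMED_CPU_NODES_PER_P100_SERVER:
        print(app, p100_servers_needed(640, app))
```

Because each claim is stated as "higher performance than" a given node count, the result is best read as an upper bound on the servers required under the linear-scaling assumption.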

Later this year, Tesla P100 accelerators for PCIe will power an upgraded version of Europe’s fastest supercomputer, the Piz Daint system at the Swiss National Supercomputing Center in Lugano, Switzerland.

“Tesla P100 accelerators deliver new levels of performance and efficiency to address some of the most important computational challenges of our time,” said Thomas Schulthess, professor of computational physics at ETH Zurich and director of the Swiss National Supercomputing Center. “The upgrade of 4,500 GPU-accelerated nodes on Piz Daint to Tesla P100 GPUs will more than double the system’s performance, enabling researchers to achieve breakthroughs in a range of fields, including cosmology, materials science, seismology and climatology.”

The Tesla P100 for PCIe is the latest addition to the NVIDIA Tesla Accelerated Computing Platform. Key features include:

- Unmatched application performance for mixed-HPC workloads: Delivering 4.7 teraflops of double-precision and 9.3 teraflops of single-precision peak performance, a single Pascal-based Tesla P100 node provides the equivalent performance of more than 32 commodity CPU-only servers.
- CoWoS with HBM2 for unprecedented efficiency: The Tesla P100 unifies processor and data into a single package to deliver unprecedented compute efficiency. An innovative approach to memory design, chip on wafer on substrate (CoWoS) with HBM2, provides 720GB/sec of memory bandwidth, a 3x boost compared with the NVIDIA Maxwell™ architecture.
- Page Migration Engine for simplified parallel programming: Frees developers to focus more on tuning for higher performance and less on managing data movement, and allows applications to scale beyond the GPU’s physical memory size with support for virtual memory paging. Unified memory technology dramatically improves productivity by enabling developers to see a single memory space for the entire node.
- Unmatched application support: With 410 GPU-accelerated applications, including nine of the top 10 HPC applications, the Tesla platform is the world’s leading HPC platform.

Tesla P100 for PCIe Specifications

- 4.7 teraflops double-precision performance, 9.3 teraflops single-precision performance and 18.7 teraflops half-precision performance with NVIDIA GPU BOOST™ technology
- Support for PCIe Gen 3 interconnect (32GB/sec bi-directional bandwidth)
- Enhanced programmability with Page Migration Engine and unified memory
- ECC protection for increased reliability
- Server-optimized for highest data center throughput and reliability
- Available in two configurations:
  - 16GB of CoWoS HBM2 stacked memory, delivering 720GB/sec of memory bandwidth
  - 12GB of CoWoS HBM2 stacked memory, delivering 540GB/sec of memory bandwidth

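The quoted specifications can be related to one another with some simple arithmetic. The sketch below uses only figures from this release (taking the 4.7 teraflops double-precision peak given under key features) and derives a few ratios that readers sizing workloads may find useful. The helper names `flops_per_byte` and `full_memory_sweep_ms` are illustrative, and all results are theoretical peaks rather than measured performance.

```python
# Peak figures quoted in the release for Tesla P100 for PCIe (with GPU Boost).
PEAK_TFLOPS = {"double": 4.7, "single": 9.3, "half": 18.7}
# Configuration name -> (memory capacity in GB, memory bandwidth in GB/sec).
CONFIGS = {"16GB": (16, 720), "12GB": (12, 540)}
PCIE_BIDIR_GB_S = 32  # PCIe Gen 3 bi-directional bandwidth


def flops_per_byte(tflops: float, bandwidth_gb_s: float) -> float:
    """Peak floating-point operations available per byte read from HBM2."""
    return (tflops * 1e12) / (bandwidth_gb_s * 1e9)


def full_memory_sweep_ms(capacity_gb: float, bandwidth_gb_s: float) -> float:
    """Time in milliseconds to read the whole memory once at peak bandwidth."""
    return capacity_gb / bandwidth_gb_s * 1e3


if __name__ == "__main__":
    for name, (capacity, bandwidth) in CONFIGS.items():
        print(name,
              f"{bandwidth / capacity:.0f} GB/sec per GB,",
              f"{full_memory_sweep_ms(capacity, bandwidth):.1f} ms per sweep,",
              f"{flops_per_byte(PEAK_TFLOPS['double'], bandwidth):.2f} DP FLOPs/byte")
    print("single/double ratio:", round(PEAK_TFLOPS["single"] / PEAK_TFLOPS["double"], 2))
    print("half/single ratio:", round(PEAK_TFLOPS["half"] / PEAK_TFLOPS["single"], 2))
    print("HBM2 (16GB) vs PCIe:", CONFIGS["16GB"][1] / PCIE_BIDIR_GB_S)
```

Both configurations work out to the same bandwidth per gigabyte of capacity, and each precision step down roughly doubles peak throughput. On-package memory bandwidth is more than 20 times the PCIe link bandwidth, which suggests why keeping data resident on the GPU matters.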