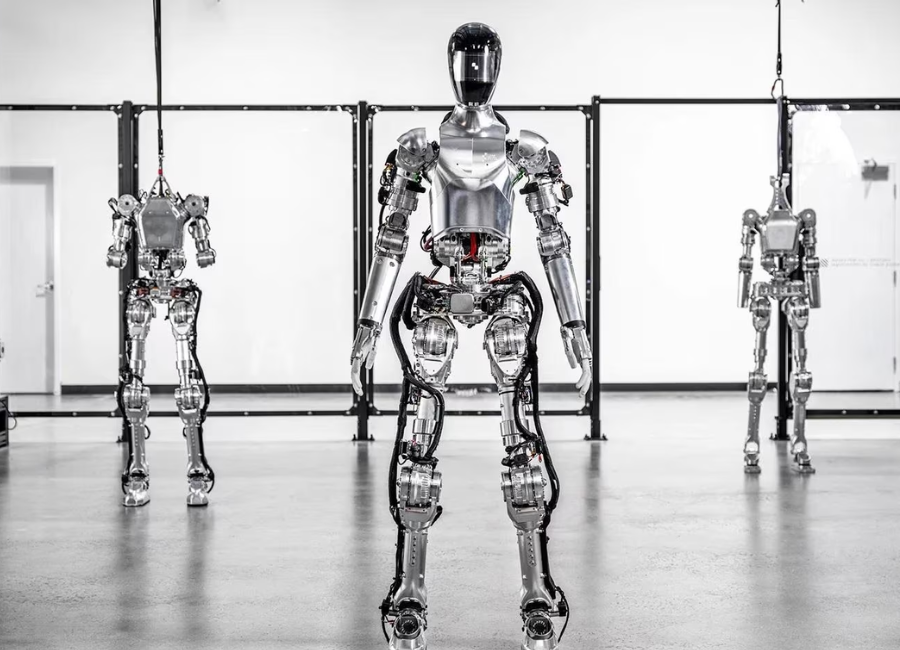

Figure, a robotics developer, has introduced a humanoid robot built on technology from OpenAI that can hold real-time conversations while performing tasks, according to reports on Wednesday. The company showcased a video demonstration of its latest creation, Figure 01, interacting with the company’s Senior AI Engineer, Corey Lynch, in a mock kitchen setting.

Through its partnership with OpenAI, Figure has equipped its robots with high-level visual and language intelligence, enabling them to comprehend and respond to human interactions in real time. In the video shared on Twitter, Figure 01 demonstrates its ability to identify objects like apples, dishes, and cups, as well as carry out tasks such as collecting trash into a basket while engaging in conversation with Lynch.

Lynch provided further insights into the Figure 01 project, explaining that the robot can describe its visual experiences, plan future actions, reflect on its memory, and explain its reasoning verbally. These capabilities come from feeding images from the robot’s cameras, together with text transcribed from speech captured by its onboard microphones, into a large multimodal model trained by OpenAI. A multimodal model of this kind can understand and generate different data types, including text and images.

Figure 01’s behavior is learned rather than remotely controlled, Lynch emphasized. The model processes the entire conversation history, including past images, to generate language responses, while also deciding which learned behavior to execute on the robot to fulfill commands.
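The loop Lynch describes can be pictured with a small Python sketch. It is only an illustration under stated assumptions, not Figure's or OpenAI's implementation: the behavior names, the `Conversation` and `Turn` types, and the keyword-based `stub_model` are all hypothetical stand-ins, and the real system uses a trained multimodal model rather than rules.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

# Hypothetical library of learned behaviors; the names are illustrative only.
BEHAVIORS = {"hand_over_apple", "place_dishes_in_rack", "collect_trash", "idle"}


@dataclass
class Turn:
    speaker: str                   # "human" or "robot"
    text: str                      # transcribed speech or generated reply
    image: Optional[bytes] = None  # camera frame captured with this turn, if any


@dataclass
class Conversation:
    turns: List[Turn] = field(default_factory=list)

    def add(self, speaker: str, text: str, image: Optional[bytes] = None) -> None:
        self.turns.append(Turn(speaker, text, image))


def stub_model(history: Conversation) -> Tuple[str, str]:
    """Stand-in for the multimodal model: simple keyword rules, not a real model."""
    last = history.turns[-1].text.lower()
    if "eat" in last or "hungry" in last:
        return "Sure, here is an apple.", "hand_over_apple"
    if "dishes" in last:
        return "They should go in the drying rack.", "place_dishes_in_rack"
    if "trash" in last:
        return "I will pick up the trash.", "collect_trash"
    return "I am not sure what to do.", "idle"


def step(history: Conversation, transcript: str, frame: Optional[bytes],
         model=stub_model) -> Tuple[str, str]:
    """One turn: record speech and image, query the model, pick a behavior."""
    history.add("human", transcript, frame)
    reply, behavior = model(history)  # the model sees the whole history
    if behavior not in BEHAVIORS:     # fall back if the model names an unknown behavior
        behavior = "idle"
    history.add("robot", reply)
    return reply, behavior
```

Calling `step(history, "Can I have something to eat?", frame)` appends both the human turn and the robot's reply to the history, so later turns can refer back to earlier speech and images. Restricting the output to a fixed set of behaviors mirrors the article's point that the model selects among learned behaviors rather than being remotely controlled.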

The robot is designed to provide concise descriptions of its surroundings and apply “common sense” for decision-making, such as inferring that dishes should be placed in a rack. It can also interpret vague statements, such as a person saying they are hungry, and take appropriate actions, such as offering an apple, all while explaining its actions.

The debut of Figure 01 garnered significant attention on Twitter, with many expressing amazement at its capabilities and some humorously referencing sci-fi movies like Terminator. Lynch also shared technical details for AI developers and researchers interested in the project, highlighting the neural network visuomotor transformer policies driving the robot’s behaviors.

Figure 01’s introduction comes amid ongoing discussions among policymakers and global leaders about the integration of AI tools into various industries. While much attention has been on large language models, developers are also exploring ways to incorporate AI into physical humanoid robotic bodies, with the aim of enhancing productivity and compatibility, particularly in sectors like space exploration.

Figure AI and OpenAI have not yet responded to requests for comment on the development. However, Lynch expressed astonishment at the rapid progress in AI and robotics, noting that conversing with a humanoid robot capable of planning and executing tasks autonomously was once thought to be a distant possibility.

In summary, Figure 01 pairs a humanoid robot with OpenAI technology so that it can converse in real time while carrying out tasks. The demonstration showcases the potential of integrating advanced AI into physical robotic bodies, opening up new possibilities for applications in various industries.